Expert Assignment Solutions with 100% Guaranteed Success

Get guaranteed success with our top-notch, qualified team! Our experts provide clear, step-by-step solutions and personalized tutoring to make sure you pass every course with good grades. We’re here for you 24/7 to make sure you get the results you want!

We Are The Most Trusted

Helping Students Ace Their Assignments & Exams with 100% Guaranteed Results

Featured Assignments

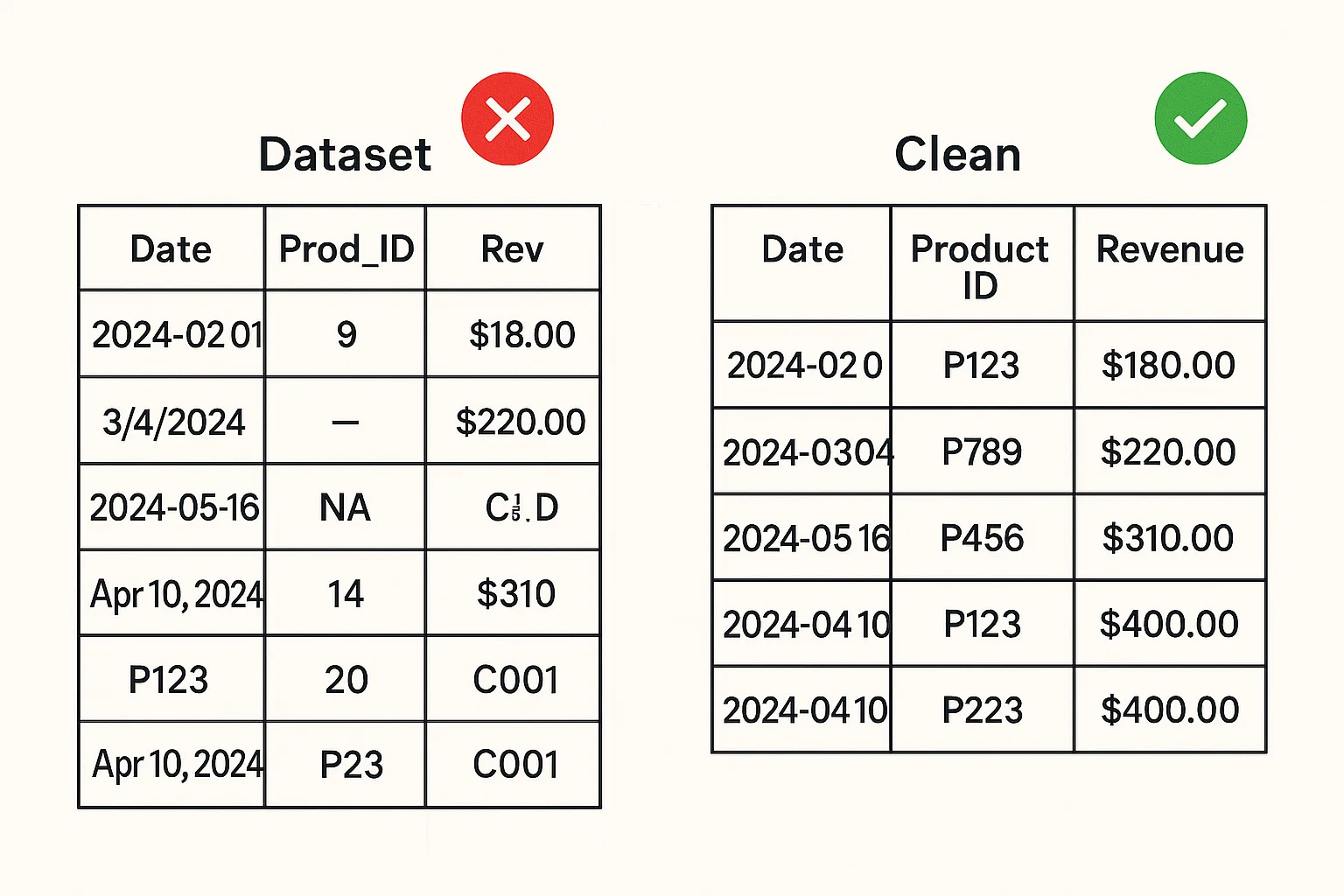

Cleaning & Structuring a Raw Multi‑Source Dataset

Data Wrangling with Python

Client Requirements

The student needed to consolidate disjointed sales data from CSV, JSON, and Excel files into a unified, tidy table with a consistent schema, handling missing values and type inconsistencies along the way.

Challenges Faced

Ingesting mixed formats was the first hurdle. The sources also had mismatched column names, inconsistent date formats, and several different null indicators such as 'NA', 'null', or empty strings.

Our Solution

We implemented a robust pipeline using Python’s pandas: read the varied formats and standardized the column names; detected and converted inconsistent date strings; replaced non‑standard nulls with NaN, then imputed or flagged them; and merged the sources into one cohesive DataFrame with a tidy layout.
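A minimal sketch of how such a pipeline might look, under stated assumptions: the column aliases, the two date formats, and the sample records below are hypothetical, since the original files are not shown here. The Excel source is left out because `pd.read_excel` needs a real file and an installed engine; its output would pass through the same `standardize` step.

```python
import io
import json

import numpy as np
import pandas as pd

# Hypothetical names and formats: the real files' schema is not shown.
COLUMN_ALIASES = {
    "Order Date": "order_date", "date": "order_date",
    "Amount": "amount", "amt": "amount",
}
NULL_TOKENS = ["NA", "null", ""]
DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y"]


def parse_dates(values):
    """Try each assumed format in turn; values matching none stay NaT."""
    parsed = None
    for fmt in DATE_FORMATS:
        attempt = pd.to_datetime(values, format=fmt, errors="coerce")
        parsed = attempt if parsed is None else parsed.fillna(attempt)
    return parsed


def standardize(frame):
    """Rename columns, unify null markers, fix types, flag missing amounts."""
    frame = frame.rename(columns=COLUMN_ALIASES)
    frame = frame.replace(NULL_TOKENS, np.nan)
    frame["order_date"] = parse_dates(frame["order_date"])
    frame["amount"] = pd.to_numeric(frame["amount"], errors="coerce")
    frame["amount_missing"] = frame["amount"].isna()
    return frame[["order_date", "amount", "amount_missing"]]


# Tiny in-memory stand-ins for the CSV and JSON sources.
csv_text = "Order Date,Amount\n2024-01-03,10.5\n04/01/2024,NA\n"
json_text = '[{"date": "2024-01-05", "amt": "null"}, {"date": "", "amt": 7}]'

# Read everything as text first so the null tokens are handled in one place.
csv_frame = pd.read_csv(io.StringIO(csv_text), dtype=str, keep_default_na=False)
json_frame = pd.DataFrame(json.loads(json_text))

combined = pd.concat(
    [standardize(csv_frame), standardize(json_frame)], ignore_index=True
)
print(combined)
```

Flagging missing amounts in a separate column, rather than imputing them right away, keeps the choice of imputation strategy open for the analysis stage.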

Results Achieved

The final dataset was clean, standardized, and ready for analysis. Students gained practical experience designing resilient ETL pipelines for messy, real-world file sources.

Client Review

I had an extremely rewarding experience with this assignment—everything was seamlessly merged, and the data pipelines ran flawlessly; overall, a professional and satisfying experience.

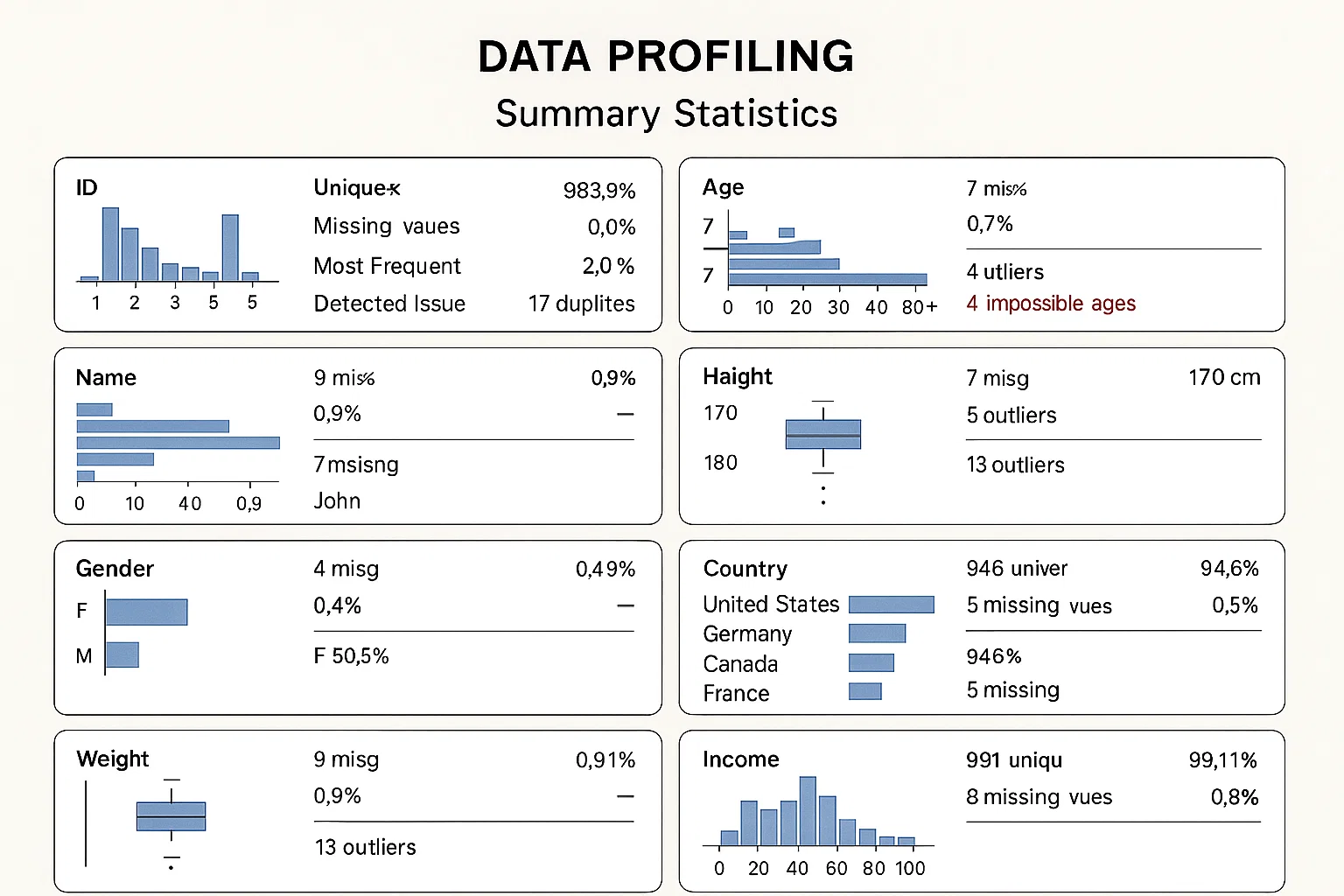

Data Profiling and Exploratory Quality Audit

Data Profiling and Quality Assurance

Client Requirements

The student wanted to profile a large demographic dataset, summarizing variable distributions, identifying missing data patterns, and highlighting potential outliers and inconsistencies.

Challenges Faced

Producing accurate summary metrics was complicated by skewed distributions, mixed data types within columns, and subtle data-entry errors (e.g., 'Feb30' or age = −1).

Our Solution

We implemented an R notebook using dplyr and ggplot2: computed counts, unique values, and missing ratios; visualized numeric distributions and categorical frequencies; flagged anomalies (impossible ages, duplicated IDs); and generated automated quality reports summarizing the findings.
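The profiling and anomaly-flagging steps can be sketched roughly as follows. The delivered solution was an R notebook; this is a pandas analogue, not that code, and the column names `person_id` and `age` and the upper age bound of 120 are assumptions made for illustration.

```python
import pandas as pd


def profile(frame):
    """Per-column non-null count, unique values, and share of missing values."""
    return pd.DataFrame({
        "count": frame.count(),
        "unique": frame.nunique(),
        "missing_ratio": frame.isna().mean(),
    })


def flag_anomalies(frame, id_col="person_id", age_col="age", max_age=120):
    """Mark rows with impossible ages or IDs that appear more than once."""
    age = pd.to_numeric(frame[age_col], errors="coerce")
    return pd.DataFrame({
        "impossible_age": (age < 0) | (age > max_age),
        "duplicated_id": frame[id_col].duplicated(keep=False),
    }, index=frame.index)


# Hypothetical sample with one negative age, one missing age, one repeated ID.
people = pd.DataFrame({
    "person_id": [1, 2, 2, 4],
    "age": [34, -1, 57, None],
})

summary = profile(people)
flags = flag_anomalies(people)
print(summary)
print(people[flags.any(axis=1)])
```

Missing ages are reported through `missing_ratio` instead of being flagged as impossible, so the two kinds of problem stay separate in the report.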

Results Achieved

Students developed skills in data quality diagnostics and automated reporting, producing actionable insights before any analytical work.

Client Review

I had an invaluable learning curve completing this profiling task—the reports were thorough, interactive, and uncovered data issues I’d never noticed. Excellent professional feel.

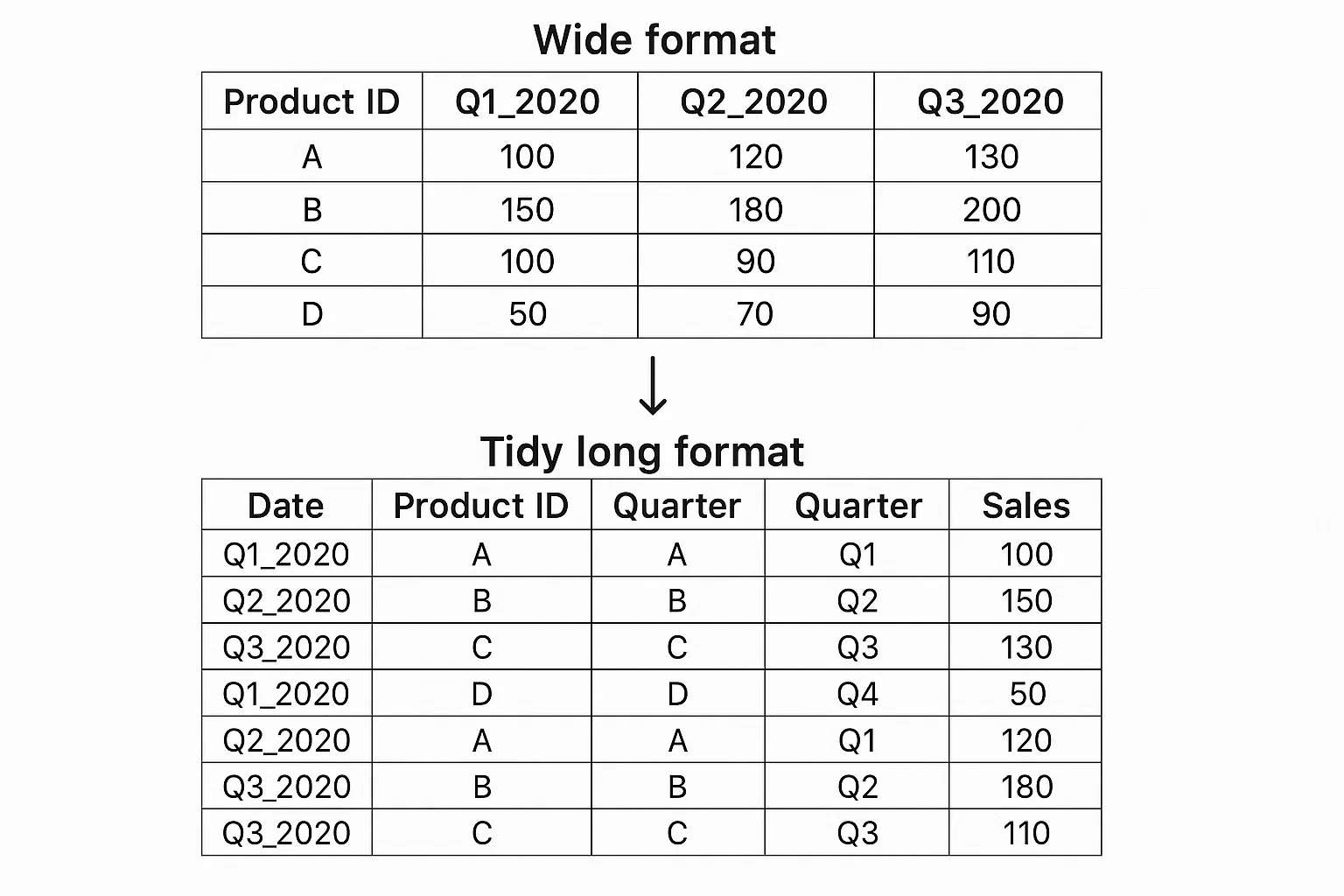

Data Tidying and Reshaping for Analysis

Data Tidying with R

Client Requirements

The student needed to transform a dataset with repeated measures in wide format into tidy long format, properly handling multiple time periods and merging auxiliary lookup tables.

Challenges Faced

Reshaping had to stay correct across multiple measure sets. Complications included column names that encode the period (e.g., score_Q1_2020 to Q4_2021) and lookup tables whose key columns did not match the main data.

Our Solution

In R (tidyverse), we programmatically pivoted columns from wide to long based on regex patterns; parsed period identifiers into separate year and quarter fields; joined in descriptive metadata from the lookup tables; and validated the tidy output with summary checks and visual spot‑checks.
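The reshaping steps above can be sketched as follows. The original work was done in R with the tidyverse; this is a pandas analogue under assumptions: the `student_id` key, the `cohort` lookup column, and the exact `score_Q<quarter>_<year>` naming pattern are hypothetical stand-ins for the real data.

```python
import pandas as pd

# Assumed naming pattern for the wide measurement columns.
PERIOD_PATTERN = r"^score_Q(?P<quarter>[1-4])_(?P<year>\d{4})$"


def to_long(wide, lookup, key="student_id"):
    """Pivot period columns to rows, split the period, and join metadata."""
    value_cols = [c for c in wide.columns if pd.Series([c]).str.match(PERIOD_PATTERN)[0]]
    long = wide.melt(
        id_vars=key, value_vars=value_cols, var_name="period", value_name="score"
    )
    parts = long["period"].str.extract(PERIOD_PATTERN)
    long["quarter"] = parts["quarter"].astype(int)
    long["year"] = parts["year"].astype(int)
    # validate= raises if the lookup has duplicate keys, guarding row counts.
    return long.drop(columns="period").merge(
        lookup, on=key, how="left", validate="many_to_one"
    )


wide = pd.DataFrame({
    "student_id": [1, 2],
    "score_Q1_2020": [70, 80],
    "score_Q2_2020": [75, 85],
})
lookup = pd.DataFrame({"student_id": [1, 2], "cohort": ["A", "B"]})

tidy = to_long(wide, lookup)
print(tidy)
```

A simple summary check in the same spirit as the validation step: the long table should have one row per student per period, so its length should equal the number of students times the number of period columns.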

Results Achieved

The final dataset adhered to tidy-data principles and was ready for downstream plotting or modeling. Students gained proficiency in complex reshaping tasks using programmatic pipelines.

Client Review

I really enjoyed this reshaping assignment—the output was beautifully cut for analysis, and I appreciated the logical flow and precision of the tidy transformation. Great learning experience!

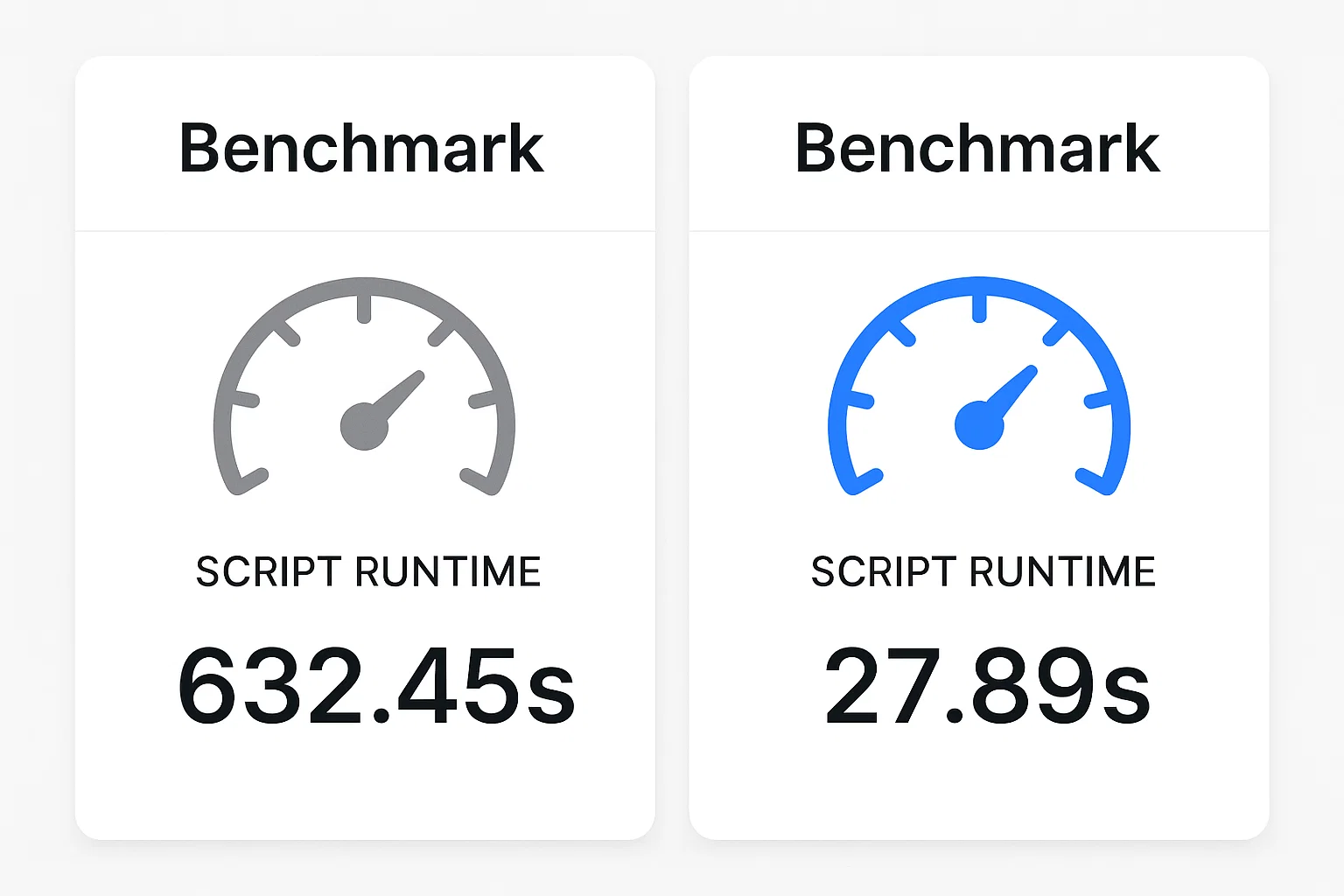

Performance Optimization in Wrangling Code

Performance Engineering in Data Science

Client Requirements

The student wanted to speed up a slow cleaning script (filtering, mutating, grouping >1M rows) that took tens of minutes in Python pandas, aiming for execution under 60 seconds.

Challenges Faced

The optimized script had to produce the same results as the original. Complications included handling large in‑memory DataFrames, avoiding chained operations that create multiple copies, and keeping results correct under parallel execution.

Our Solution

We implemented several speed enhancements: refactored the script into single-pass operations using boolean masks; switched to dask with chunked processing; optimized data types (categorical encodings, datetime conversion); and used numba for key numeric calculations, benchmarking each improvement.
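Two of these steps, the data type optimization and the single boolean mask, can be illustrated with plain pandas. This is a sketch under assumptions, not the delivered script: the `region`, `order_date`, and `units` columns and the filter conditions are hypothetical, and the dask and numba parts are not shown.

```python
import pandas as pd


def optimize_dtypes(frame):
    """Store repeated labels as categories and dates as datetime64."""
    out = frame.copy()
    out["region"] = out["region"].astype("category")
    out["order_date"] = pd.to_datetime(out["order_date"], format="%Y-%m-%d")
    return out


def summarize(frame, start="2024-01-01"):
    """Apply all filters as one combined mask, then aggregate once."""
    mask = (frame["units"] > 0) & (frame["order_date"] >= pd.Timestamp(start))
    # observed=True skips category levels that have no rows after filtering.
    return frame.loc[mask].groupby("region", observed=True)["units"].sum()


# Hypothetical sample standing in for the >1M-row dataset.
raw = pd.DataFrame({
    "region": ["north", "south", "north", "south"],
    "order_date": ["2023-12-31", "2024-01-02", "2024-02-10", "2024-03-05"],
    "units": [5, 3, 0, 4],
})

fast = optimize_dtypes(raw)
totals = summarize(fast)
print(totals)
```

Combining the conditions into one mask means the frame is filtered once, instead of producing an intermediate copy after each chained filter.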

Results Achieved

Run time dropped from more than 600 seconds to under 45 seconds, a speed-up of roughly 13×. Students learned profiling, memory management, and optimization techniques for scalable wrangling.

Client Review

This optimization task was top‑notch—the improvements were dramatic, the toolchain well‑structured, and my students saw serious performance gains. Extremely positive overall!