Expert Assignment Solutions with 100% Guaranteed Success

Get guaranteed success with our top-notch, qualified team! Our experts provide clear, step-by-step solutions and personalized tutoring to make sure you pass every course with good grades. We're here for you 24/7, making sure you get the results you want!

We Are The Most Trusted

Helping Students Ace Their Assignments & Exams with 100% Guaranteed Results

Featured Assignments

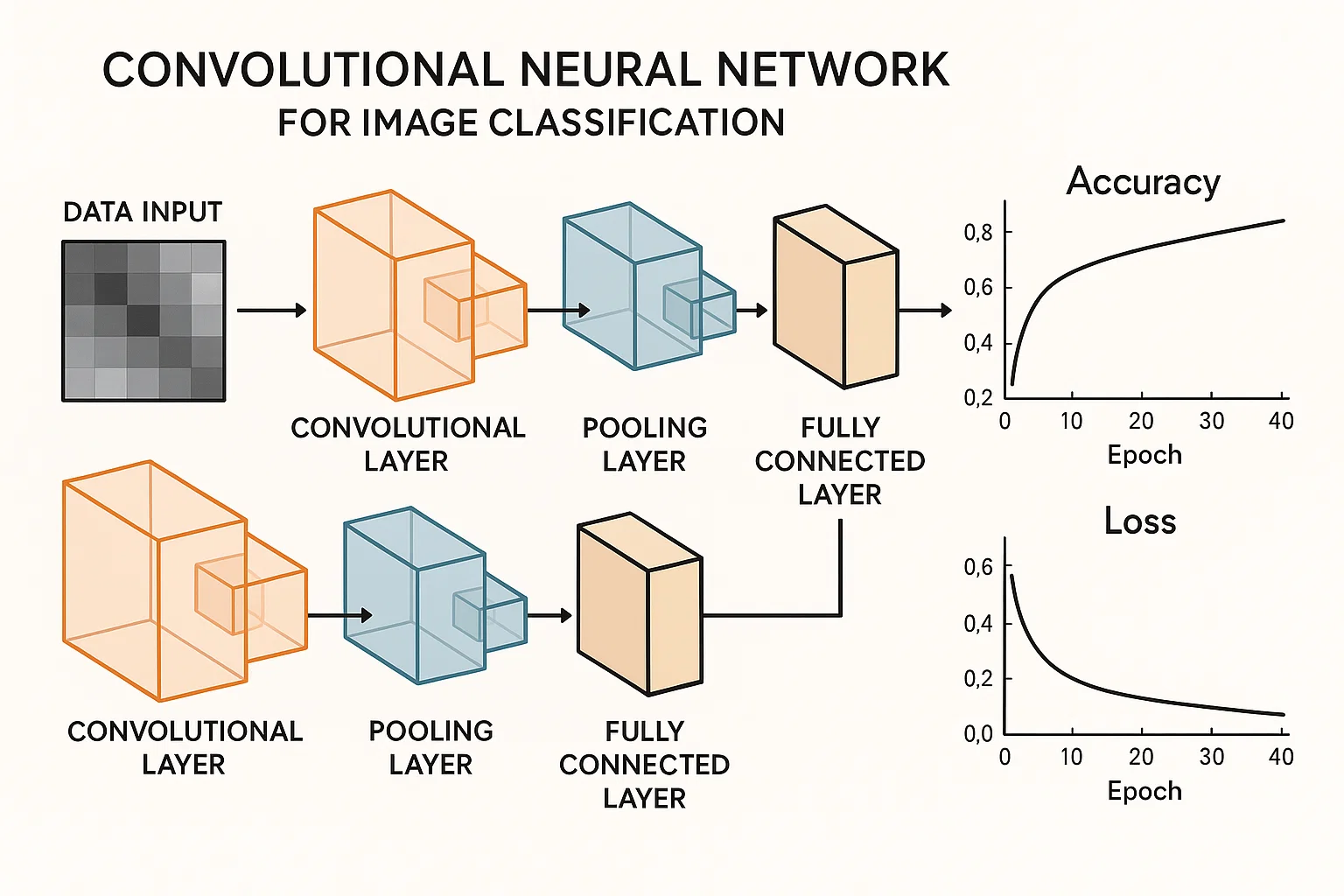

Implementing a Convolutional Neural Network (CNN) for Image Classification

Deep Learning and Computer Vision

Client Requirements

The student needed to implement a Convolutional Neural Network (CNN) from scratch to classify images into predefined categories, such as identifying objects in the CIFAR-10 or MNIST datasets. The task required the student to implement layers such as convolution, pooling, and fully connected layers, as well as techniques like dropout and data augmentation. The student was also required to experiment with different architectures and fine-tune hyperparameters for optimal performance.

Challenges Faced

We ensured that students fully understood the core components of CNNs, but some faced challenges in tuning the architecture for optimal performance, especially when selecting the right filter sizes, strides, and padding. Some students had difficulties implementing the backpropagation process correctly and optimizing the network for convergence without overfitting. Data augmentation and ensuring the model generalized well across unseen data also proved tricky.
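To make the interaction between filter size, stride, and padding concrete, here is a minimal sketch of the standard output-size formula, floor((n + 2p - k) / s) + 1, applied along one spatial dimension. The function name `conv_output_size` and the three-layer stack are illustrative assumptions, not part of any student's actual submission.

```python
def conv_output_size(input_size: int, kernel_size: int,
                     stride: int = 1, padding: int = 0) -> int:
    """Output length of a convolution or pooling layer along one dimension."""
    if stride < 1:
        raise ValueError("stride must be at least 1")
    span = input_size + 2 * padding - kernel_size
    if span < 0:
        raise ValueError("kernel is larger than the padded input")
    return span // stride + 1


# Hypothetical stack applied to a 32x32 CIFAR-10 image (one dimension shown).
size = 32
size = conv_output_size(size, kernel_size=3, stride=1, padding=1)  # 3x3 conv, padding 1
size = conv_output_size(size, kernel_size=2, stride=2)             # 2x2 pooling, stride 2
size = conv_output_size(size, kernel_size=5, stride=1, padding=0)  # 5x5 conv, no padding
print(size)
```

Tracing sizes this way before writing any layers helps catch mismatches between the last pooling layer and the first fully connected layer.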

Our Solution

We guided students through building a CNN using frameworks like TensorFlow or PyTorch, with step-by-step explanations of each layer and the backpropagation process. We introduced them to tools for hyperparameter tuning such as grid search and learning rate schedules. Additionally, we provided exercises on using dropout, batch normalization, and data augmentation techniques to improve the model’s performance and generalization.
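The two tuning tools mentioned above can be sketched without any framework. The example below assumes a step-decay schedule (one of several common learning rate schedules) and an exhaustive grid search. `toy_validation_score` is a hypothetical stand-in for "train the CNN with these settings and return validation accuracy", and the grid values are made up for illustration.

```python
from itertools import product


def step_decay(initial_lr, epoch, drop=0.5, every=10):
    """Multiply the learning rate by `drop` once every `every` epochs."""
    return initial_lr * (drop ** (epoch // every))


def grid_search(param_grid, evaluate):
    """Score every combination in param_grid; return (best_params, best_score)."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_score(params):
    # Hypothetical placeholder for a real training run.
    # By construction it peaks at lr=0.01, dropout=0.5.
    return -abs(params["lr"] - 0.01) * 10 - abs(params["dropout"] - 0.5)


grid = {"lr": [0.1, 0.01, 0.001], "dropout": [0.25, 0.5]}
best, score = grid_search(grid, toy_validation_score)
print(best)
print([step_decay(0.01, epoch) for epoch in (0, 10, 20)])
```

Grid search cost grows multiplicatively with each added hyperparameter, so in practice the grid is usually kept small or replaced with random search.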

Results Achieved

The students successfully implemented CNNs that accurately classified images from benchmark datasets. Most students achieved high accuracy, demonstrating their understanding of CNN architecture, data preprocessing, and performance optimization. Their final models showcased how to effectively apply deep learning to computer vision tasks.

Client Review

I had an incredible experience working on this assignment. Building a CNN from scratch really helped me understand the inner workings of neural networks, especially the importance of hyperparameter tuning and using regularization techniques. The support and guidance provided made it much easier to implement the model and optimize its performance. I now feel confident in applying deep learning techniques to real-world image classification tasks.

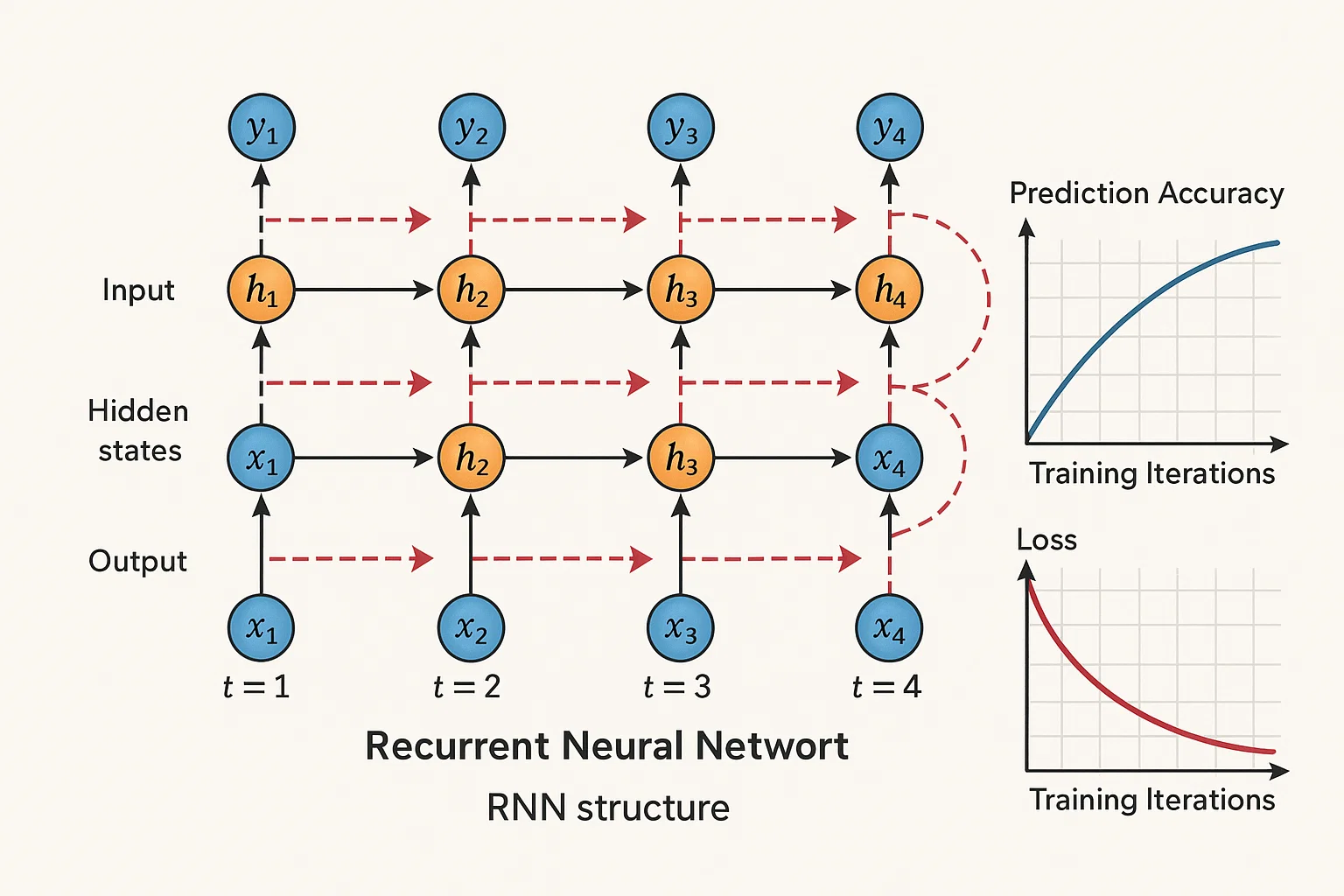

Building a Recurrent Neural Network (RNN) for Sequence Prediction

Recurrent Neural Networks and Sequence Prediction

Client Requirements

The student needed to build and train a Recurrent Neural Network (RNN) for sequence prediction, such as predicting stock prices, time-series data, or generating text. The task required the student to implement both simple RNNs and Long Short-Term Memory (LSTM) networks. The student was also asked to experiment with different architectures, loss functions, and optimization techniques to handle the vanishing gradient problem commonly encountered in RNNs.

Challenges Faced

We ensured that students understood the complexities of working with sequential data, but some faced difficulties with the vanishing gradient problem in standard RNNs. Understanding the role of LSTMs and GRUs (Gated Recurrent Units) in mitigating these issues was also challenging. Additionally, students struggled with tuning the network to avoid overfitting, especially with smaller datasets, and handling long-term dependencies effectively.
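The vanishing gradient problem can be seen in a toy setting. The sketch below assumes a scalar recurrence h_t = tanh(w * h_{t-1}) with no inputs, which is a deliberate simplification of a real RNN. By the chain rule, the gradient of the final state with respect to the initial state is a product of one factor per time step, and with |w| < 1 that product shrinks geometrically.

```python
import math


def gradient_through_time(w, h0, steps):
    """Return d h_T / d h_0 for the scalar recurrence h_t = tanh(w * h_{t-1})."""
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: derivative of tanh(w * h_prev) with respect to h_prev.
        grad *= w * (1.0 - h * h)
    return grad


for steps in (1, 10, 50):
    print(steps, gradient_through_time(w=0.9, h0=0.5, steps=steps))
```

LSTMs and GRUs mitigate this by carrying state along a gated, largely additive path, so the gradient is not forced through the same squashing multiplication at every step.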

Our Solution

We provided detailed lectures on the theory behind RNNs and LSTMs, explaining their use for sequence learning tasks. We gave students hands-on exercises on implementing simple RNNs and LSTMs using TensorFlow or PyTorch and guided them through the process of handling sequence data, such as padding and truncating sequences. We also covered techniques to deal with overfitting, such as dropout and early stopping, and explained how to optimize the learning process.
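Padding and truncating can be sketched in a few lines of plain Python. The helper name `pad_or_truncate` is hypothetical, and this version pads and truncates at the end of each sequence. Framework helpers may work from the other end by default, so check their documentation before relying on a particular behavior.

```python
def pad_or_truncate(sequences, max_len, pad_value=0):
    """Return copies of the sequences, each exactly max_len items long.

    Longer sequences keep their first max_len items; shorter ones are
    right-padded with pad_value.
    """
    fixed = []
    for seq in sequences:
        clipped = list(seq[:max_len])
        clipped.extend([pad_value] * (max_len - len(clipped)))
        fixed.append(clipped)
    return fixed


batch = [[5, 8, 2], [7], [4, 1, 9, 3, 6]]
print(pad_or_truncate(batch, max_len=4))
```

Once every sequence has the same length, the batch can be stacked into a single tensor. The padding value should be one the model can mask or ignore.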

Results Achieved

The students successfully implemented RNNs and LSTMs for sequence prediction tasks, showing improvements in their model’s ability to handle sequential dependencies. Many students achieved high accuracy in tasks like time-series forecasting or text generation, demonstrating their grasp of sequence modeling and advanced recurrent architectures.

Client Review

Building an RNN and LSTM for sequence prediction was a challenging yet fascinating experience. I gained a deep understanding of how to process sequential data and how RNNs and LSTMs address the limitations of traditional neural networks. The practical guidance provided helped me overcome challenges like the vanishing gradient problem and optimize my model for better performance. This assignment has greatly boosted my confidence in working with sequence-based deep learning tasks.

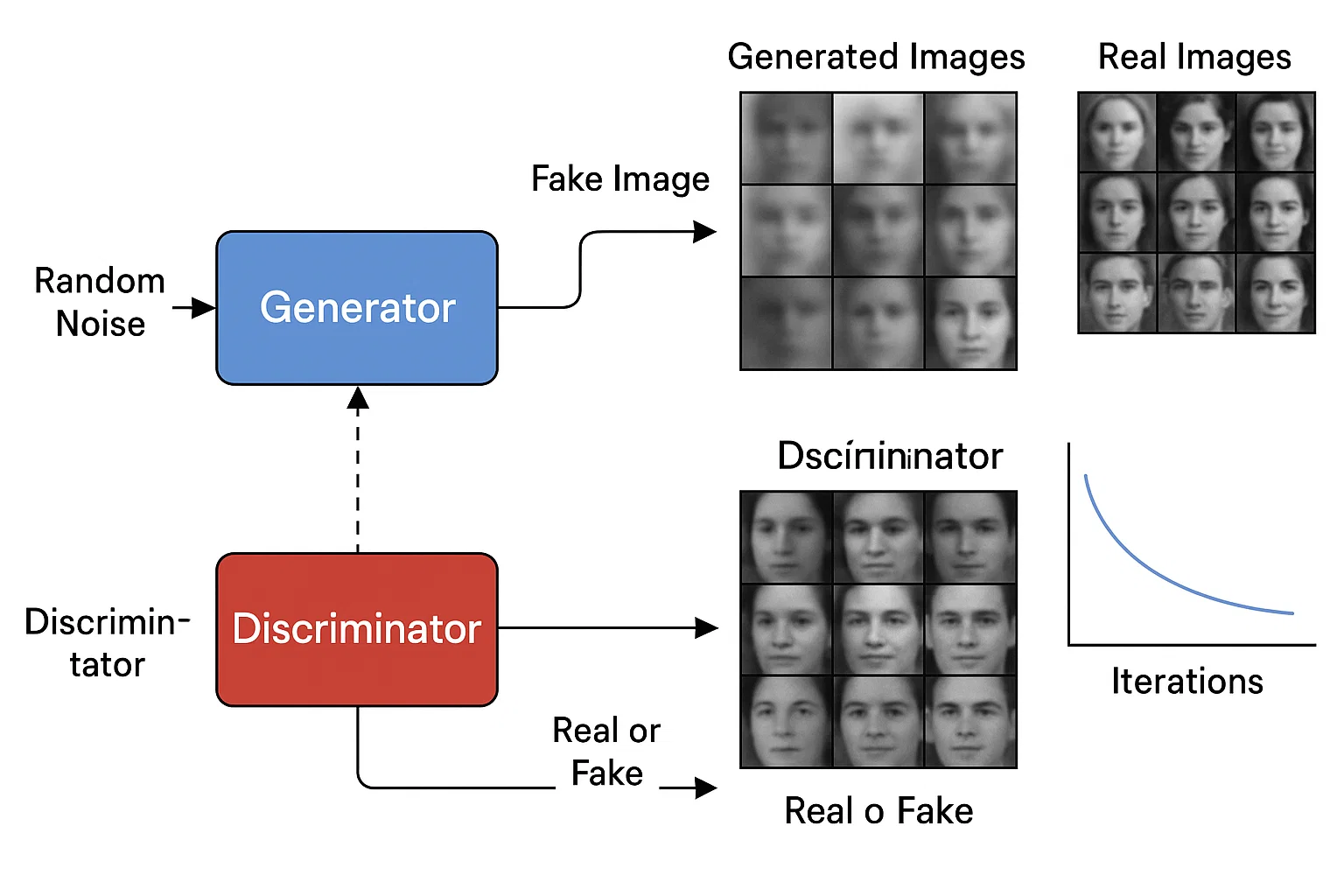

Generative Adversarial Networks (GANs) for Image Generation

Generative Adversarial Networks and Image Generation

Client Requirements

The student wanted to explore the concept of Generative Adversarial Networks (GANs) by implementing a GAN to generate new images based on a given dataset. The task required the student to implement both the generator and discriminator networks, ensuring that the generator could produce realistic images and the discriminator could accurately distinguish between real and generated images. The student was also required to evaluate the quality of generated images using metrics like the Inception Score or Fréchet Inception Distance (FID).
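For intuition about FID, here is a simplified one-dimensional sketch. Real FID fits multivariate Gaussians to Inception-network features and computes ||mu_r - mu_g||^2 + Tr(S_r + S_g - 2 (S_r S_g)^(1/2)). With a single feature, that expression reduces to the squared difference of the means plus the squared difference of the standard deviations. The function name and sample values below are illustrative assumptions.

```python
from statistics import fmean, pstdev


def frechet_distance_1d(real, generated):
    """Squared Frechet distance between 1-D Gaussians fitted to two samples."""
    mu_r, mu_g = fmean(real), fmean(generated)
    sd_r, sd_g = pstdev(real), pstdev(generated)
    return (mu_r - mu_g) ** 2 + (sd_r - sd_g) ** 2


real_feature = [0.0, 1.0, 2.0, 3.0]
shifted_feature = [value + 1.0 for value in real_feature]
print(frechet_distance_1d(real_feature, real_feature))
print(frechet_distance_1d(real_feature, shifted_feature))
```

Lower values mean the generated distribution sits closer to the real one. A score of zero means the fitted Gaussians match, not that the images are identical.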

Challenges Faced

We ensured that students understood the theoretical foundation of GANs, but many faced complications when training the models due to instability in the adversarial training process. Balancing the performance of the generator and discriminator to prevent one from overpowering the other was a significant challenge. Additionally, generating high-quality images that were both realistic and diverse took considerable effort, especially when using more complex datasets.

Our Solution

We provided students with an overview of GANs, focusing on the architecture and the adversarial training process. Step-by-step guidance was given on implementing both the generator and discriminator, as well as strategies to stabilize training, such as using the Wasserstein GAN (WGAN) approach and gradient penalty methods. We also explained how to evaluate the performance of the GAN using quality metrics like FID.
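For reference, the critic objective commonly used with the WGAN gradient penalty approach can be written as follows. The notation is an assumption introduced here for illustration: D is the critic, P_r and P_g are the real and generated distributions, lambda is the penalty weight, and x-hat is a random interpolation between a real and a generated sample.

```latex
L_{\text{critic}}
  = \mathbb{E}_{\tilde{x} \sim P_g}\big[D(\tilde{x})\big]
  - \mathbb{E}_{x \sim P_r}\big[D(x)\big]
  + \lambda \, \mathbb{E}_{\hat{x} \sim P_{\hat{x}}}
    \Big[\big(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\big)^2\Big],
\qquad
\hat{x} = \epsilon x + (1 - \epsilon)\tilde{x}, \quad \epsilon \sim U[0, 1]
```

The penalty term pushes the norm of the critic's gradient toward 1 at the interpolated points, which is what stabilizes training compared with weight clipping.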

Results Achieved

The students successfully implemented GANs that generated realistic images, demonstrating a strong understanding of adversarial training and image generation. Many students produced impressive results, with generated images that were visually appealing and statistically comparable to real images, based on evaluation metrics.

Client Review

Working on GANs was a fascinating challenge. Understanding how adversarial training works and then implementing both the generator and discriminator networks gave me a solid grasp of this powerful deep learning technique. The results were incredibly rewarding, and the ability to generate high-quality images from scratch made me realize the potential of GANs in AI applications. This was a very educational experience, and I now feel capable of applying GANs to more complex tasks.

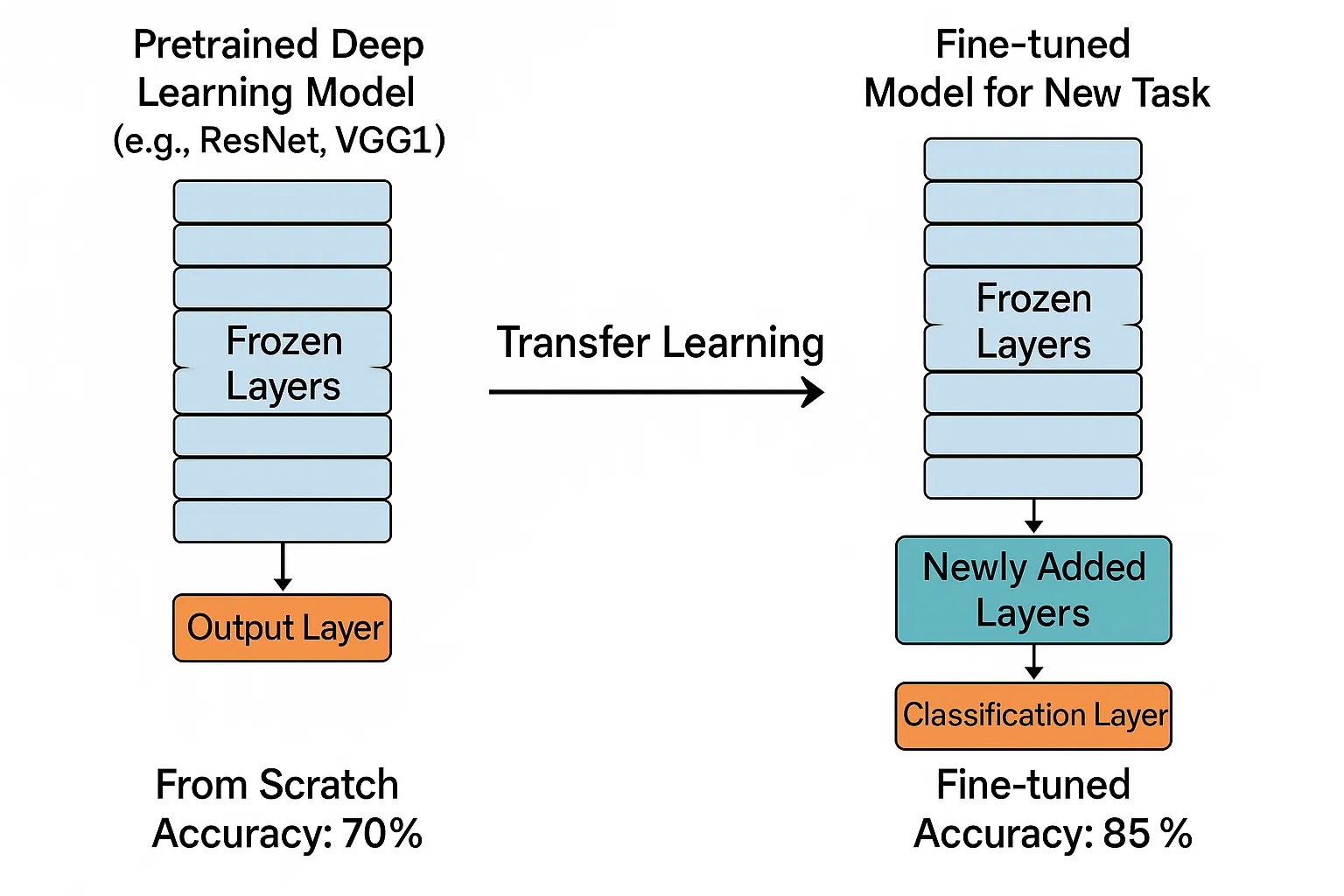

Transfer Learning for Fine-Tuning Pretrained Models

Transfer Learning and Model Fine-Tuning

Client Requirements

The student needed to apply transfer learning by fine-tuning a pretrained deep learning model (such as VGG16, ResNet, or Inception) on a new, smaller dataset. The task required them to implement the transfer learning process, including freezing the base layers of the model and training only the final layers. The student was also asked to evaluate the performance of the fine-tuned model and compare it to training a model from scratch.

Challenges Faced

We ensured that students understood the advantages and limitations of transfer learning, but some struggled with fine-tuning the model correctly, particularly in balancing frozen and trainable layers. Additionally, understanding how to select the right layers to freeze, and preventing overfitting when working with smaller datasets, presented challenges. Some students also had difficulty assessing the performance improvements gained through transfer learning versus training from scratch.

Our Solution

We provided resources on pretrained models and the concept of transfer learning, including instructions on how to load pretrained weights and freeze the base layers. We guided students in setting up fine-tuning for the last few layers of the model, and discussed techniques like data augmentation and early stopping to prevent overfitting. Performance evaluation was done using metrics like accuracy and loss, and students were encouraged to compare their fine-tuned model’s performance with that of models trained from scratch.
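The freeze-then-fine-tune idea can be illustrated without a framework. In the sketch below, the `Layer` class, the layer names, and the weights and gradients are all hypothetical. In PyTorch the equivalent step is typically setting `requires_grad = False` on the base parameters, and in Keras setting `layer.trainable = False`.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    weights: list
    trainable: bool = True


def freeze_base(layers, num_trainable):
    """Freeze every layer except the last num_trainable ones."""
    cutoff = len(layers) - num_trainable
    for index, layer in enumerate(layers):
        layer.trainable = index >= cutoff


def sgd_step(layers, grads, lr=0.1):
    """Apply one gradient descent step, skipping frozen layers."""
    for layer in layers:
        if not layer.trainable:
            continue
        layer.weights = [w - lr * g for w, g in zip(layer.weights, grads[layer.name])]


# Hypothetical "pretrained" model: two base layers plus a new classifier head.
model = [
    Layer("conv1", [0.2, -0.4]),
    Layer("conv2", [0.7, 0.1]),
    Layer("classifier", [0.0, 0.0]),
]
freeze_base(model, num_trainable=1)
grads = {layer.name: [1.0, 1.0] for layer in model}
sgd_step(model, grads)
print([(layer.name, layer.trainable, layer.weights) for layer in model])
```

Only the classifier weights change after the step. Unfreezing more layers later, usually with a smaller learning rate, is one common way to balance frozen and trainable layers.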

Results Achieved

The students successfully applied transfer learning to fine-tune pretrained models, achieving strong performance even with limited data. Many students saw significant improvements in model accuracy compared to training from scratch, demonstrating a practical understanding of how transfer learning can be applied to real-world problems.

Client Review

Transfer learning was an incredibly insightful concept to work with. Fine-tuning a pretrained model allowed me to achieve great results even with a small dataset. The ability to leverage existing knowledge embedded in pretrained models made a significant difference in the performance. This assignment greatly expanded my understanding of transfer learning and its practical applications in deep learning.